HTTP Streaming in Val Town

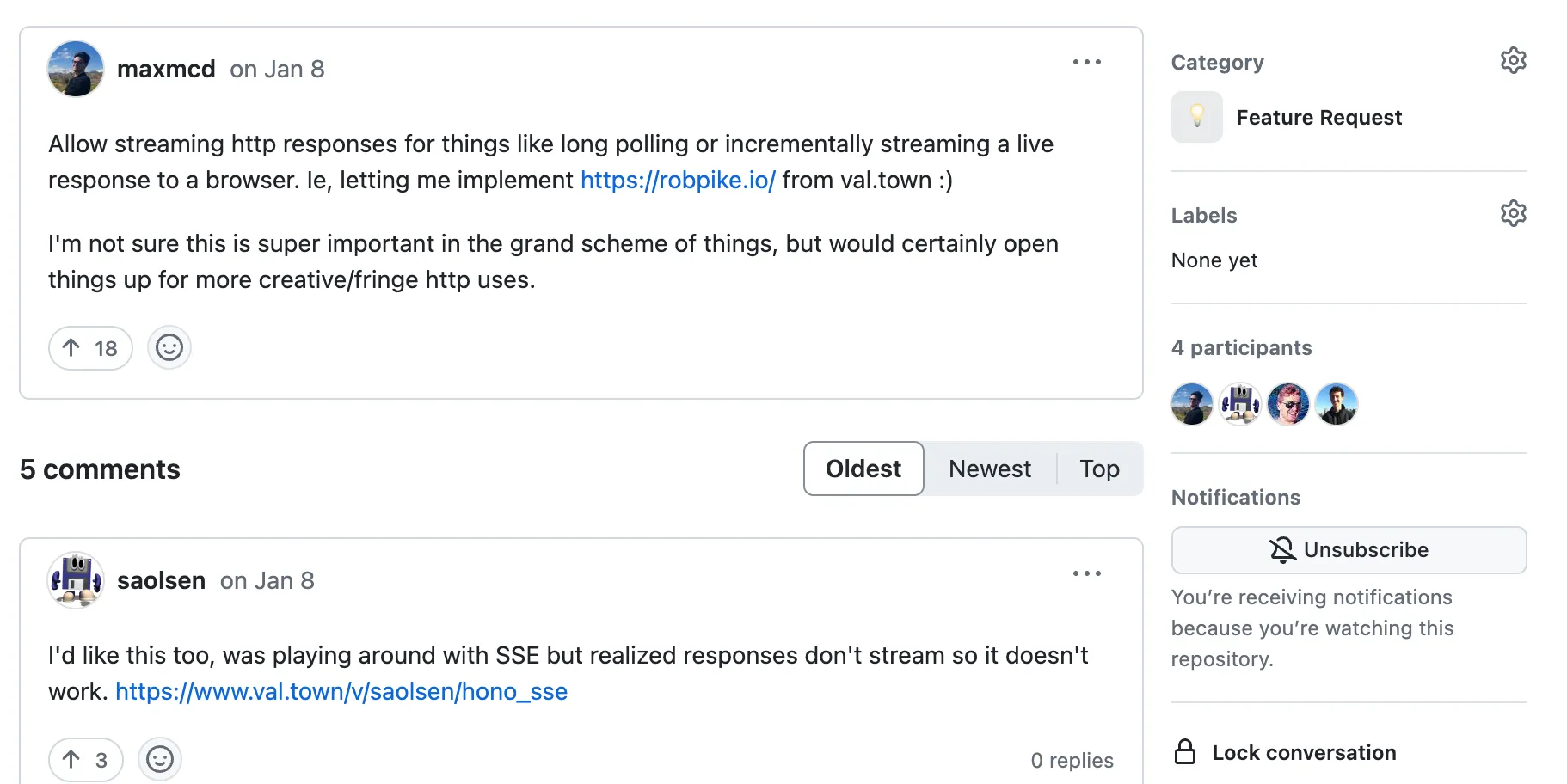

Val Town now supports HTTP streaming, our most-requested feature.

You may recognize me as the guy who originally requested that feature. Now I work here and I shipped it!

Streaming opens up many new use-cases: streaming LLM responses, large requests and responses, and server-sent events.

Streaming LLM Responses

LLMs are a classic use-case for streaming: they send responses word-by-word as they’re generated. Here is a minimal example of a streaming OpenAI response. OpenAI’s SDK lets us request a streaming response by specifying stream: true. It returns an async iterable, which we turn into a ReadableStream for our response.

    import { OpenAI } from "https://esm.town/v/std/openai";

    export default async function (req: Request): Promise<Response> {
      const openai = new OpenAI();
      // stream: true makes the SDK return an async iterable of chunks
      const stream = await openai.chat.completions.create({
        stream: true,
        messages: [
          {
            role: "user",
            content: "Write a poem in the style of beowulf about the DMV",
          },
        ],
        model: "gpt-3.5-turbo",
        max_tokens: 2048,
      });
      const encoder = new TextEncoder();
      return new Response(
        new ReadableStream({
          async start(controller) {
            for await (const chunk of stream) {
              // Some chunks carry no text; fall back to an empty string
              controller.enqueue(
                encoder.encode(chunk.choices[0]?.delta?.content ?? "")
              );
            }
            controller.close();
          },
        }),
        { headers: { "Content-Type": "text/event-stream" } }
      );
    }

It’s a little bit of a dance to connect the two tools together, but the result is fun:

Fork on Val Town | View live output
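The conversion in the example above is not specific to OpenAI. As a minimal sketch, assuming only that the source is an async iterable of strings, the same dance can be pulled into a reusable helper. The names iterableToStream and fakeTokens are made up for illustration and are not part of the OpenAI SDK or Val Town. This version uses pull() rather than start(), so chunks are only read from the source when the client is ready for more.

```typescript
// Hypothetical helper: wraps any async iterable of strings in a byte stream.
function iterableToStream(
  chunks: AsyncIterable<string>,
): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  const iterator = chunks[Symbol.asyncIterator]();
  return new ReadableStream<Uint8Array>({
    // pull() runs only when the consumer wants more data, so a slow client
    // slows down how fast we read from the source.
    async pull(controller) {
      const { done, value } = await iterator.next();
      if (done) {
        controller.close();
        return;
      }
      controller.enqueue(encoder.encode(value));
    },
    // If the client disconnects, give the source a chance to clean up.
    async cancel() {
      await iterator.return?.();
    },
  });
}

// Stand-in for an LLM token stream (illustrative only).
async function* fakeTokens(): AsyncGenerator<string> {
  for (const token of ["Lo! ", "We ", "waited ", "at ", "the ", "DMV"]) {
    yield token;
  }
}
```

A val could then return new Response(iterableToStream(fakeTokens()), { headers: { "Content-Type": "text/event-stream" } }).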

The request limit is now 100MB

We’ve increased the limit on request body size from 2MB to 100MB. Here’s an example val that returns the size of the request body without holding all of it in memory:

    export default async function (req: Request): Promise<Response> {
      if (req.method !== "POST") {
        return new Response("Method not allowed", { status: 405 });
      }
      // req.body is null when the request has no body
      if (!req.body) {
        return new Response("0");
      }
      const reader = req.body.getReader();
      let totalBytes = 0;
      console.log(performance.now());
      // Read chunk by chunk so the full body is never held in memory
      while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        console.log(value.byteLength, performance.now());
        totalBytes += value.byteLength;
      }
      return new Response(`${totalBytes}`);
    }

If we upload a large file to that endpoint, it will respond as we’d expect:

    $ curl --data @image.png https://maxm-reportbodysize.web.val.run
    66799801

Unlimited response sizes

We used to buffer and store responses, which imposed a 10MB limit on how much you could send from an HTTP val. Now we don’t, and you can send very big responses.

In the example below, we proxy the latest 130MB supernovae image from the Webb Telescope. The client can begin downloading the image before the server has finished downloading it.

    export default async function (req: Request) {
      return fetch(
        "https://live.staticflickr.com/65535/53782948438_9b85e57a6c_o_d.png"
      );
    }

Fork on Val Town | View live output
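Returning the fetch result directly works because a Response body is already a stream. If you want to look at the bytes on their way through, one option is a TransformStream. The sketch below is an illustration under assumptions, not something taken from the val above: withByteCount and onDone are hypothetical names, and only the Content-Type header is copied over.

```typescript
// Hypothetical helper: re-wraps an upstream response so its body still streams
// to the client while we count the bytes passing through.
function withByteCount(
  upstream: Response,
  onDone: (totalBytes: number) => void,
): Response {
  if (!upstream.body) return upstream; // nothing to stream

  let totalBytes = 0;
  const counter = new TransformStream<Uint8Array, Uint8Array>({
    transform(chunk, controller) {
      totalBytes += chunk.byteLength;
      controller.enqueue(chunk); // forward each chunk as soon as it arrives
    },
    flush() {
      onDone(totalBytes); // runs once the upstream body has ended
    },
  });

  // Copy only Content-Type; headers such as Content-Length or
  // Content-Encoding may no longer describe the re-streamed body.
  const headers = new Headers();
  const contentType = upstream.headers.get("Content-Type");
  if (contentType) headers.set("Content-Type", contentType);

  return new Response(upstream.body.pipeThrough(counter), {
    status: upstream.status,
    headers,
  });
}
```

In a val, this might be used as return withByteCount(await fetch(url), (n) => console.log(n)), where url is whatever you are proxying. The body is never collected in memory, so the client can still start downloading before the upstream transfer finishes.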

Server-Sent Events

Server-Sent Events are kind of like a one-way WebSocket. The ChatGPT example above actually used server-sent events. They’re useful when you want to work with a stream of discrete events, rather than a continuous stream of data. Here’s an example of constructing a server-sent event stream manually to make a clone of robpike.io.

    const msg = new TextEncoder().encode("💩");
    const initialDelay = 20;

    export default async function (req: Request): Promise<Response> {
      let timerId: number | undefined;
      const body = new ReadableStream({
        start(controller) {
          let currentDelay = initialDelay;
          // Send one message, then schedule the next with a slightly longer delay
          function writeToStream() {
            currentDelay *= 1.03;
            controller.enqueue(msg);
            timerId = setTimeout(writeToStream, currentDelay);
          }
          writeToStream();
        },
        // Called when the client disconnects; stop the pending timer
        cancel() {
          if (typeof timerId === "number") {
            clearTimeout(timerId);
          }
        },
      });
      return new Response(body, {
        headers: {
          "Content-Type": "text/event-stream",
        },
      });
    }

Fork on Val Town | View live output

For a more complex application using server-sent events, check out my little ChatGPT clone.
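If you plan to read the stream with the browser’s EventSource API, each message needs the framing defined for text/event-stream: one or more "data:" lines, optional "event:" and "id:" lines, and a blank line to end the event. Here is a small sketch of that framing. The field names come from the server-sent events format, but the formatSseEvent helper and the SseEvent type are hypothetical names chosen for this example.

```typescript
interface SseEvent {
  data: string;
  event?: string; // optional event name; clients treat unnamed events as "message"
  id?: string; // optional id, sent back as Last-Event-ID when the client reconnects
}

// Serializes one event in the text/event-stream wire format.
function formatSseEvent({ data, event, id }: SseEvent): string {
  const lines: string[] = [];
  if (event !== undefined) lines.push(`event: ${event}`);
  if (id !== undefined) lines.push(`id: ${id}`);
  // Each line of a multi-line payload needs its own "data:" field.
  for (const line of data.split("\n")) lines.push(`data: ${line}`);
  // A blank line terminates the event.
  return lines.join("\n") + "\n\n";
}
```

Inside a ReadableStream you would enqueue new TextEncoder().encode(formatSseEvent({ data: "hello" })), and on the client new EventSource(url).onmessage receives "hello" as event.data.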

Under the hood

We had to entirely reorient the way we run user code to support streaming.

Previously, both requests and responses were read into buffers before being sent to and from the val. This had both speed and scalability issues, as we wouldn’t want to buffer a very large response in memory. Now, we only communicate with user code over HTTP.

With HTTP vals, we proxy the incoming request straight to the worker. This way we don’t mess too much with the request structure and you’ll see a request lifecycle that is very similar to running your own HTTP server.

If you’re running a script, email, or cron val, we send an HTTP request with the relevant information and wait for a response. Now that we’re talking HTTP, this paves the way for future support for WebSockets.
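To make the "everything is HTTP" idea concrete, here is a purely illustrative sketch of how a non-HTTP trigger, such as a cron tick, could be expressed as a request and response pair. Everything in it is invented for the example: the ValEvent shape, the placeholder URL, and the function names. It is not Val Town’s actual protocol.

```typescript
// Hypothetical event shape; not Val Town's real wire format.
type ValEvent =
  | { type: "cron"; lastRunAt: string }
  | { type: "email"; from: string; subject: string };

function json(body: unknown, status = 200): Response {
  return new Response(JSON.stringify(body), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

// Host side: wrap the event in an ordinary HTTP request.
function toWorkerRequest(event: ValEvent): Request {
  // Placeholder URL; a real host would address its own worker.
  return new Request("http://worker.invalid/run", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(event),
  });
}

// Worker side: a single HTTP handler dispatches on the event type.
async function handleWorkerRequest(req: Request): Promise<Response> {
  const event = (await req.json()) as ValEvent;
  if (event.type === "cron") {
    return json({ ran: "cron", lastRunAt: event.lastRunAt });
  }
  if (event.type === "email") {
    return json({ ran: "email", subject: event.subject });
  }
  return json({ error: "unknown event type" }, 400);
}
```

The point of the sketch is only that once every trigger is a Request, the host needs one code path to reach user code, whatever the val type.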

We built most of this functionality in a library called deno-http-worker, which we’ve open sourced on GitHub.

And let this be a lesson to you: be careful what features you wish for – you just may end up joining the company and building them.

Go forth and stream

We’re excited to see what you build! Here are a few more examples to inspire your next val:

- maxm/multiplayerCircles - a collaborative canvas of movable circles that polls the database on the backend and streams changes to the frontend.

- thesephist/webgen - ask ChatGPT to generate a website and watch it render bit by bit in front of your eyes. (Watch it being built live on Steve’s live stream.)

- maxm/asciiNycCameras - streaming ASCII videos of NYC traffic cameras.